☑ That's Good Enough

☑ The State of Python Coroutines: Python 3.5

I recently spotted that Python 3.5 has added yet more features to make coroutines more straightforward to implement and use. Since I’m well behind the curve I thought I’d bring myself back up to date over a series of blog posts, each going over some functionality added in successive Python versions — this one covers additional syntax that was added in Python 3.5.

This is the 4th of the 4 articles that currently make up the “State of Python Coroutines” series.

- The State of Python Coroutines: yield from Fri 10 Jun, 2016

- The State of Python Coroutines: Introducing asyncio Thu 16 Jun, 2016

- The State of Python Coroutines: asyncio - Callbacks vs. Coroutines Tue 5 Jul, 2016

- The State of Python Coroutines: Python 3.5 Wed 13 Jul, 2016

In the previous post in this series I went over an example of using coroutines to handle IO with asyncio and how it compared with the same example implemented using callbacks. This almost brings us up to date with coroutines in Python, but there’s one more change yet to discuss: Python 3.5 contains some new keywords to make defining and using coroutines more convenient.

As usual for a Python release, 3.5 contains quite a few changes but probably the biggest, and certainly the most relevant to this article, are those proposed by PEP-492. These changes aim to raise coroutines from something that’s supported by libraries to the status of a core language feature supported by proper reserved keywords.

Sounds great — let’s run through the new features.

Declaring and awaiting coroutines

To declare a coroutine the syntax is the same as a normal function, but async def is used instead of the def keyword. This serves approximately the same function as the @asyncio.coroutine decorator did previously. Indeed, I believe one purpose of that decorator now, aside from documentation, is to let generator-based coroutines interoperate with async def ones. Since coroutines are now a language mechanism and shouldn’t be intrinsically tied to a specific library, there’s also a new decorator, @types.coroutine, that can be used for this purpose.

Previously coroutines were essentially a special case of generators. It’s important to note that this is no longer the case: they are a wholly separate language construct. They do still use the generator mechanisms under the hood, but my understanding is that’s primarily an implementation detail with which programmers shouldn’t need to concern themselves most of the time.

The distinction between a function and a generator is whether the yield keyword appears in its body, but the distinction between a function and a coroutine is whether it’s declared with async def. In Python 3.5, if you try to use yield in a coroutine declared with async def you’ll get a SyntaxError (i.e. a routine cannot be both a generator and a coroutine).
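The distinction can be checked with the standard inspect module, which gained iscoroutinefunction() and iscoroutine() in Python 3.5. The function names below are purely illustrative. The last check uses yield from rather than a bare yield, because Python 3.6 later gave yield inside async def a meaning (asynchronous generators), whereas yield from is rejected in every version.

```python
import inspect


def plain():
    return 1


def gen():
    yield 1  # the presence of yield makes this a generator function


async def coro():
    return 1  # async def makes this a coroutine function


print(inspect.isgeneratorfunction(plain), inspect.iscoroutinefunction(plain))  # False False
print(inspect.isgeneratorfunction(gen), inspect.iscoroutinefunction(gen))      # True False
print(inspect.isgeneratorfunction(coro), inspect.iscoroutinefunction(coro))    # False True

# Calling a coroutine function doesn't run its body; it returns a coroutine object.
c = coro()
print(inspect.iscoroutine(c))  # True
c.close()  # close the never-awaited coroutine to avoid a RuntimeWarning

# 'yield from' inside async def is rejected when the code is compiled.
try:
    compile("async def f():\n    yield from []\n", "<example>", "exec")
except SyntaxError as exc:
    print("SyntaxError:", exc.msg)
```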

So far so simple, but coroutines aren’t particularly useful until they can yield control to other code; that’s more or less the whole point. With generator-based coroutines this was achieved with yield from, and with the new syntax it’s achieved with the await keyword. This can be used to wait for the result from any object which is awaitable.

An awaitable object is one of:

- A coroutine, as declared with async def.
- A coroutine-compatible generator (i.e. decorated with @types.coroutine).
- Any object that implements an appropriate __await__() method.
- Objects defined in C/C++ extensions with a tp_as_async.am_await method, which is more or less equivalent to __await__() in pure Python objects.

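The __await__() option is perhaps simpler than it sounds: any object that wishes to be awaitable needs to return an iterator from its __await__() method. This iterator is used to implement the fundamental wait operation. Each value the iterator yields is passed up through the chain of awaiting coroutines to whatever is driving the outermost one (typically the event loop), and when the iterator finishes, the value carried by its StopIteration (i.e. the return value, if __await__() is written as a generator) becomes the value of the await expression.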
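To make the difference between yielded values and the result of an await concrete, this sketch steps a coroutine by hand with send(), much as an event loop would. Countdown, waiter() and drive() are hypothetical names. It’s only suitable for manual driving: asyncio’s tasks expect to receive None or a Future from a yield and would reject the integers produced here.

```python
class Countdown:
    """Hypothetical awaitable used only to illustrate the __await__() protocol."""

    def __init__(self, n):
        self.n = n

    def __await__(self):
        # Being a generator function, __await__() returns an iterator as required.
        for i in range(self.n):
            yield i            # passed up to whatever drives the outermost coroutine
        return "finished"      # becomes the value of the await expression


async def waiter():
    result = await Countdown(3)
    return "waiter got " + result


def drive(coro):
    """Step a coroutine by hand, as an event loop would, collecting yielded values."""
    yielded = []
    try:
        while True:
            yielded.append(coro.send(None))
    except StopIteration as stop:
        # The coroutine's own return value travels out on StopIteration.
        return yielded, stop.value


print(drive(waiter()))  # ([0, 1, 2], 'waiter got finished')
```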

It’s important to note that this definition of awaitable is what’s required of the argument to await, but the same conditions don’t apply to yield from. There are some things that both will accept (e.g. generators decorated with @types.coroutine), but await won’t accept generic generators, and yield from won’t accept the other forms of awaitable (e.g. an object with only an __await__() method), nor a native coroutine unless it’s used inside a generator decorated with @types.coroutine.
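The asymmetry is easy to check by advancing each combination to its first suspension point. In this sketch the sample objects and the first_step() helper are hypothetical; the outcomes are what the rules above predict, with a TypeError wherever the operand isn’t accepted.

```python
import types


def plain_gen():
    yield "from plain generator"


@types.coroutine
def compatible_gen():
    yield "from @types.coroutine generator"


class AwaitOnly:
    """Awaitable via __await__(), but not iterable."""

    def __await__(self):
        yield "from __await__()"


async def use_await(obj):
    await obj


def use_yield_from(obj):
    yield from obj


def first_step(runner):
    """Advance a coroutine or generator to its first yield; report what happened."""
    try:
        return runner.send(None)
    except TypeError:
        return "TypeError"
    finally:
        runner.close()  # clean up whatever is still suspended


cases = [
    ("await a @types.coroutine generator", use_await(compatible_gen())),
    ("await an __await__() object", use_await(AwaitOnly())),
    ("await a plain generator", use_await(plain_gen())),
    ("yield from a plain generator", use_yield_from(plain_gen())),
    ("yield from an __await__() object", use_yield_from(AwaitOnly())),
]
for label, runner in cases:
    print(label, "->", first_step(runner))
```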

It’s equally important to note that a coroutine defined with async def can’t ever directly return control to the event loop; there simply isn’t the machinery to do so. Typically this isn’t much of a problem, since most of the time you’ll be using asyncio functions such as asyncio.sleep() to do this. However, if you wanted to implement something like asyncio.sleep() yourself then, as far as I can tell, you could only do so with generator-based coroutines.

OK, so let me be pedantic and contradict myself for a moment: you can indeed implement something like asyncio.sleep() yourself in an async def coroutine, by awaiting an asyncio.Future.

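A minimal sketch of such a sleep follows. The name my_sleep() is hypothetical, and it assumes only the public asyncio APIs loop.create_future() (added in Python 3.5.2) and loop.call_later(); it makes no attempt to handle cancellation.

```python
import asyncio


async def my_sleep(delay, result=None):
    """Hypothetical asyncio.sleep() look-alike; deliberately ignores cancellation."""
    loop = asyncio.get_event_loop()
    future = loop.create_future()
    # Ask the loop to complete the future once `delay` seconds have elapsed.
    # If this coroutine were cancelled first, the callback would later raise
    # InvalidStateError on the already-cancelled future: one of the gaps here.
    loop.call_later(delay, future.set_result, result)
    # Awaiting the future is what hands control back to the event loop; the
    # Future's own __await__() does the actual yield.
    return await future


async def demo():
    print(await my_sleep(0.1, "woke up"))  # prints "woke up" after about 0.1 s


# asyncio.run() needs Python 3.7+; on 3.5 use
# asyncio.get_event_loop().run_until_complete(demo()) instead.
asyncio.run(demo())
```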

A simple implementation along those lines has a lot of deficiencies, as it doesn’t handle being cancelled or other corner cases, but you get the idea. However, the key point here is that it depends on asyncio.Future, and if you look at the pure-Python implementation of that you’ll see that __await__() is just an alias for __iter__(), and that method uses yield to return control to the event loop. As I said earlier, it’s all built on generators under the hood, and since yield isn’t permitted in an async def coroutine, there’s no way to achieve that directly (at least as far as I can tell).

In general, however, you’ll rarely need to return control to the event loop directly: the vast majority of cases where you’re likely to do so are for a fixed delay or for IO, and asyncio already has you covered in both.

One final note is that there’s also an abstract base class for awaitable objects, collections.abc.Awaitable, in case you ever need to test the “awaitability” of something you’re passed.
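One documented wrinkle with that ABC is that generator-based coroutines created with @types.coroutine don’t define __await__(), so isinstance() reports them as not awaitable; inspect.isawaitable(), also new in 3.5, covers both cases. The function and class names below are just for illustration.

```python
import inspect
import types
from collections.abc import Awaitable


async def native():
    return 1


@types.coroutine
def generator_based():
    yield


class HasAwait:
    def __await__(self):
        yield  # any class defining __await__() counts as Awaitable


coro = native()
gen = generator_based()

print(isinstance(coro, Awaitable))        # True
print(isinstance(HasAwait(), Awaitable))  # True
print(isinstance(gen, Awaitable))         # False: no __await__() method
print(inspect.isawaitable(gen))           # True: inspect checks for @types.coroutine

coro.close()  # never awaited, so close it to avoid a RuntimeWarning
```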

Coroutines example

As a quick example of await in action, consider a script which pings several hosts in parallel to determine whether they’re alive. The example is quite contrived, but it illustrates the new syntax, and it’s also an example of how to use the asyncio subprocess support.

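A minimal sketch of such a script might look like the following. It assumes a Unix-style ping that accepts -c 1 to send a single packet (Windows ping uses -n instead), and the names check_host(), ping_all() and PING_COMMAND are hypothetical. Each check runs as a subprocess via asyncio.create_subprocess_exec(), and asyncio.gather() waits for all of them concurrently.

```python
import asyncio

# Assumed ping invocation: "-c 1" sends a single packet on Linux and macOS.
PING_COMMAND = ("ping", "-c", "1")


async def check_host(host, results, command=PING_COMMAND):
    """Run one ping subprocess and record whether it exited successfully."""
    proc = await asyncio.create_subprocess_exec(
        *command, host,
        stdout=asyncio.subprocess.DEVNULL,
        stderr=asyncio.subprocess.DEVNULL,
    )
    returncode = await proc.wait()
    # No lock needed: other coroutines only run while this one is suspended
    # at an await, and there is no await between here and the return.
    results[host] = (returncode == 0)


async def ping_all(hosts, command=PING_COMMAND):
    """Check every host concurrently; return a dict of host -> alive?"""
    results = {}
    await asyncio.gather(*(check_host(host, results, command) for host in hosts))
    return results
```

Something like asyncio.run(ping_all(["localhost", "example.com"])) (Python 3.7+) returns a dictionary mapping each host to True or False. Making the command a parameter also lets you swap in a different checker without touching the coroutines.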

One point worth noting is that because we’re using coroutines as opposed to threads to achieve concurrency within the script¹, we can safely access the results dictionary without any form of locking. Control only passes between coroutines at an await, so we can be confident that only one coroutine will be accessing it at any one time.

Asynchronous context managers and iterators

As well as the simple await demonstrated above, there’s also new syntax allowing context managers to be used in coroutines.

The issue with a standard context manager is that the __enter__() and __exit__() methods could take some time or perform blocking operations, so how can a coroutine use them whilst still yielding to the event loop during these operations?

The answer is support for asynchronous context managers. These work in a very similar manner but provide two new methods, __aenter__() and __aexit__(), which are called instead of the regular versions when the caller uses async with instead of the plain with statement. In both cases they are expected to return an awaitable object that does the actual work.
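As an illustration, here is a minimal sketch of an asynchronous context manager. FakeConnection is a hypothetical stand-in, with asyncio.sleep() in place of real network operations. Because __aenter__() and __aexit__() are declared with async def, calling them returns coroutines, which satisfies the requirement to return an awaitable.

```python
import asyncio


class FakeConnection:
    """Hypothetical async context manager standing in for e.g. a database connection."""

    def __init__(self, log):
        self.log = log

    async def __aenter__(self):
        await asyncio.sleep(0.01)  # stand-in for a slow connection handshake
        self.log.append("connected")
        return self                # bound to the name after "as"

    async def __aexit__(self, exc_type, exc, tb):
        await asyncio.sleep(0.01)  # stand-in for a slow, orderly shutdown
        self.log.append("disconnected")
        return False               # don't suppress any exception from the block


async def use_connection(log):
    async with FakeConnection(log) as conn:
        conn.log.append("working")
    return log


print(asyncio.run(use_connection([])))  # ['connected', 'working', 'disconnected']
```

While either method is waiting, the event loop is free to run other coroutines, which is exactly what a plain with statement can’t offer.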

These are a natural extension to the syntax already described and allow coroutines to make use of any constructions which may perform blocking IO in their enter/exit routines — this could be database connections, distributed locks, socket connections, etc.

Another natural extension is the asynchronous iterator. In this case, objects that wish to be iterable implement an __aiter__() method which returns an asynchronous iterator, which in turn implements an __anext__() method. These two are directly analogous to __iter__() and __next__() for standard iterators, the difference being that __anext__() must return an awaitable object to obtain the value instead of the value directly, and signals the end of iteration by raising StopAsyncIteration rather than StopIteration.

Note that in Python 3.5.x prior to 3.5.2 the __aiter__() method was also expected to return an awaitable, but this changed in 3.5.2 so that it should return the iterator object directly. This makes it a little fiddly to write compatible code, because earlier versions still expect an awaitable, but I strongly recommend writing code which caters for the later versions; the Python documentation has a workaround if necessary.
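A minimal asynchronous iterator might look like the sketch below. Ticker and collect() are hypothetical names. The example follows the 3.5.2+ convention of returning the iterator directly from __aiter__(), and uses an explicit async for loop rather than an asynchronous comprehension, which only arrived in Python 3.6.

```python
import asyncio


class Ticker:
    """Hypothetical asynchronous iterator yielding 0 .. count-1, pausing between items."""

    def __init__(self, count, delay=0.01):
        self.count = count
        self.delay = delay
        self.current = 0

    def __aiter__(self):
        # 3.5.2+ convention: return the asynchronous iterator itself, not an awaitable.
        return self

    async def __anext__(self):
        # Being async def, calling this returns an awaitable, as the protocol requires.
        if self.current >= self.count:
            raise StopAsyncIteration      # the asynchronous counterpart of StopIteration
        await asyncio.sleep(self.delay)   # other coroutines can run during the pause
        value = self.current
        self.current += 1
        return value


async def collect(count):
    values = []
    async for value in Ticker(count):
        values.append(value)
    return values


print(asyncio.run(collect(3)))  # [0, 1, 2]
```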

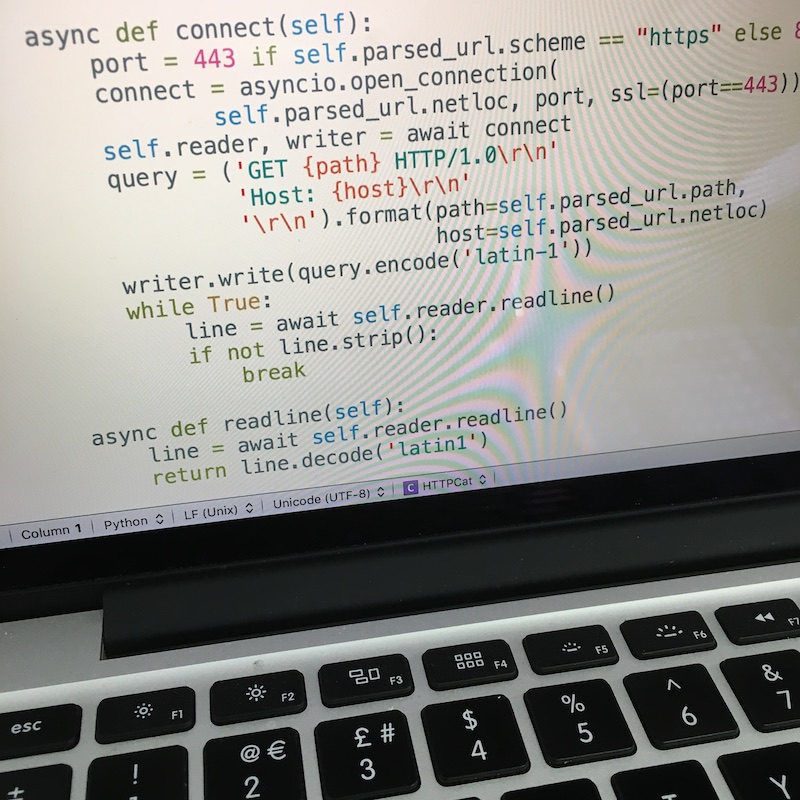

To wrap up this section, let’s look at an example of async for. With apologies in advance to anyone who cares even the slightest bit about the correctness of HTTP implementations, the example is an HTTP version of the cat utility.

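A minimal sketch in that spirit follows. The class and function names are hypothetical, and it leans only on asyncio.open_connection() and the StreamReader/StreamWriter API. It speaks HTTP/1.0, so the server closes the connection after the body and never uses chunked encoding; it also ignores the status code and any query string, and has no TLS support.

```python
import asyncio
import sys
from urllib.parse import urlsplit


class HTTPLines:
    """Hypothetical async iterator over the body of a plain-HTTP GET, line by line.

    Deliberately naive: HTTP/1.0 only, no redirects, no TLS, status code ignored.
    """

    def __init__(self, url):
        parts = urlsplit(url)
        self.host = parts.hostname
        self.port = parts.port or 80
        self.path = parts.path or "/"
        self.reader = None
        self.writer = None

    def __aiter__(self):
        return self

    async def __anext__(self):
        if self.reader is None:
            await self._send_request()
        line = await self.reader.readline()
        if not line:
            # An empty read means EOF: the server has closed the connection.
            self.writer.close()
            raise StopAsyncIteration
        return line.decode("utf-8", errors="replace")

    async def _send_request(self):
        self.reader, self.writer = await asyncio.open_connection(self.host, self.port)
        request = "GET {} HTTP/1.0\r\nHost: {}\r\n\r\n".format(self.path, self.host)
        self.writer.write(request.encode("ascii"))
        await self.writer.drain()
        # Skip the status line and headers, which end at the first blank line.
        while True:
            header = await self.reader.readline()
            if header in (b"\r\n", b"\n", b""):
                break


async def http_cat(url, out=sys.stdout):
    # Each line is written as soon as it arrives, not after the whole body.
    async for line in HTTPLines(url):
        out.write(line)
```

From the command line, something like asyncio.run(http_cat("http://example.com/")) (Python 3.7+) prints the body line by line as it arrives.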

This is a heavily over-simplified example with many shortcomings (e.g. it doesn’t even support redirections or chunked encoding), but it shows how the __aiter__() and __anext__() methods can be used to wrap up operations which may block for significant periods.

One nice property of this construction is that lines of output will flow down as soon as they arrive from the socket — many HTTP clients seem to want to block until the whole document is retrieved and return it as a string. This is terribly inconvenient if you’re fetching a file of many GB.

Coroutines make streaming the document back in chunks a much more natural affair, however, and I really like the ease of use for the client. Of course, in reality you’d use a library like aiohttp to avoid messing around with HTTP yourself.

Conclusions

That’s the end of this sequence of articles, and we’re brought bang up to date. Overall I really like the fact that the Python developers have focused on making coroutines a proper first-class concept within the language. The implementation is somewhat different to other languages, which often seem to try to hide the coroutines themselves and offer only futures as the language interface, but I do like knowing where my context switches are constrained to happen, especially if I’m relying on this mechanism to avoid locking that would otherwise be required.

The syntax is nice and the paradigm is pleasant to work with, but are there any downsides? Well, because the implementation is based on generators under the hood, I do have my concerns around raw performance. One of the benefits of asynchronous IO should really be the performance boost and scalability vs. threads for predominantly IO-bound applications. While the scalability is probably there, I’m a little unconvinced about the performance for real-world cases.

I hunted around for some proper benchmarks, but they seem few and far between. There’s a page which has a useful collection of links, although it hasn’t been updated for almost a year; I guess things are unlikely to have moved on significantly in that time. From looking over these results it’s clear that asyncio and aiohttp aren’t the cutting edge of performance, but then again they’re not terrible either.

When all’s said and done, if performance is the all-consuming, overriding concern then you’re unlikely to be using Python anyway. If it’s important enough to warrant an impact on readability then you might want to at least investigate threads or gevent before making a decision. But if you’ve got what I would regard as a pretty typical set of concerns, where readability and maintainability are the top priority even though you don’t want performance to suffer too much, then take a serious look at coroutines: with a bit of practice I think you might learn to love them.

Or maybe at least dislike them less than the other options.

¹ I’m ignoring the fact that we’re also using subprocesses for concurrency in this example, since it’s just an implementation detail of this particular case and not relevant to the point of safe access to data structures within the script.