☑ That's Good Enough

- What’s New in Python 3.10 - Library Changes Fri Dec 09 2022

- What’s New in Python 3.10 - Other New Features Fri Nov 18 2022

- What’s New in Python 3.10 - Pattern Matching Sun Nov 06 2022

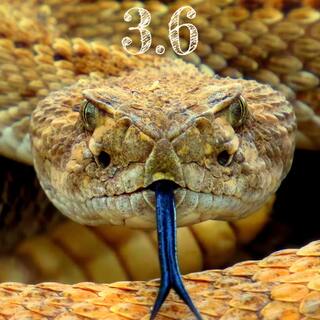

- What’s New in Python 3.9 - Library Changes Sat Nov 05 2022

- What’s New in Python 3.9 - New Features Thu Oct 27 2022

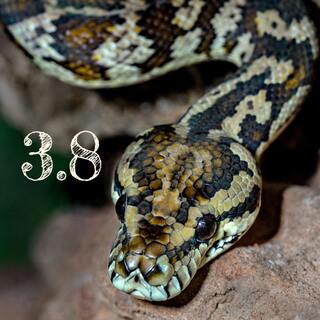

- What’s New in Python 3.8 - Library Changes Wed Jul 06 2022

- What’s New in Python 3.8 - New Features Fri Jun 10 2022

- What’s New in Python 3.7 - Library Updates Sun Jun 05 2022

- What’s New in Python 3.7 - New Features Wed Oct 27 2021

- What’s New in Python 3.6 - Module Updates Sun Aug 22 2021

December 2022

☑ What’s New in Python 3.10 - Library Changes

In this series on the features introduced by every version of Python 3, we conclude our coverage of Python 3.10 with the updates to the standard library modules.

This is the 23rd of the 36 articles that currently make up the “Python 3 Releases” series, the first of which was What’s New in Python 3.0.

Read article (31 minutes)

November 2022

☑ What’s New in Python 3.10 - Other New Features

In this series looking at features introduced by every version of Python 3, we continue our look at Python 3.10, focusing on the new features in the language and library. In this post we’ll cover improved error reporting and debugging, new features for type hints, and a few other smaller language enhancements.

This is the 22nd of the 36 articles that currently make up the “Python 3 Releases” series, the first of which was What’s New in Python 3.0.

Read article (15 minutes)

☑ What’s New in Python 3.10 - Pattern Matching

In this series looking at features introduced by every version of Python 3, we take a first look at Python 3.10, focusing on the new features in the language and library. In this post we’ll cover the new structural pattern matching feature.

This is the 21st of the 36 articles that currently make up the “Python 3 Releases” series, the first of which was What’s New in Python 3.0.

Read article (11 minutes)

☑ What’s New in Python 3.9 - Library Changes

In this series looking at features introduced by every version of Python 3, we continue our look at Python 3.9 by going through the notable changes in the standard library. These include concurrency improvements with changes to asyncio, concurrent.futures, and multiprocessing; networking features with enhancements to ipaddress, imaplib, and socket; and some additional OS features in os and pathlib.

This is the 20th of the 36 articles that currently make up the “Python 3 Releases” series, the first of which was What’s New in Python 3.0.

Read article (15 minutes)

October 2022

☑ What’s New in Python 3.9 - New Features

In this series looking at features introduced by every version of Python 3, we move on to Python 3.9 and examine some of the major new features. These include type hinting generics in standard collections, string methods for stripping specified prefixes and suffixes from strings, extensions to function and variable annotations, and new modules for timezone information and topological sorting of graphs.
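Three of the features listed above can be seen together in a short sketch, assuming Python 3.9 or later. The helper names `strip_affixes` and `build_order` are made up for illustration; `str.removeprefix`, `str.removesuffix` and `graphlib.TopologicalSorter` are the standard library names.

```python
# Requires Python 3.9 or later. Helper names are hypothetical.
from graphlib import TopologicalSorter


def strip_affixes(name: str) -> str:
    # Each method removes the given affix only if it is present.
    return name.removeprefix("test_").removesuffix(".py")


# Built-in collections used directly as generic types (no typing.Dict).
def build_order(deps: dict[str, set[str]]) -> list[str]:
    # `deps` maps each node to the set of nodes it depends on;
    # static_order() yields dependencies before their dependants.
    return list(TopologicalSorter(deps).static_order())


print(strip_affixes("test_parser.py"))
print(build_order({"app": {"lib"}, "lib": {"core"}}))
```

The time zone module mentioned in the summary (`zoneinfo`) is left out here because it depends on time zone data being available on the host system.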

This is the 19th of the 36 articles that currently make up the “Python 3 Releases” series, the first of which was What’s New in Python 3.0.

Read article (26 minutes)

July 2022

☑ What’s New in Python 3.8 - Library Changes

In this series looking at features introduced by every version of Python 3, we continue our look at Python 3.8, examining changes to the standard library. These include some useful new functionality in functools, some new mathematical functions in math and statistics, some improvements for running servers on dual-stack hosts in asyncio and socket, and also a number of new features in typing.
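The summary does not say which functions the article covers, so the following is only a sketch of a few additions that did arrive in Python 3.8: `functools.cached_property`, `math.prod`, `math.isqrt` and `statistics.fmean`. The `Dataset` class is hypothetical.

```python
# Requires Python 3.8 or later. `Dataset` is a hypothetical example class.
import math
import statistics
from functools import cached_property


class Dataset:
    def __init__(self, values):
        self.values = list(values)

    @cached_property
    def mean(self):
        # Computed on first access, then stored on the instance.
        return statistics.fmean(self.values)


data = Dataset([1, 2, 3, 4])
print(data.mean)                  # floating-point arithmetic mean
print(math.prod(data.values))     # product of all values
print(math.isqrt(17))             # integer square root, rounded down
```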

This is the 18th of the 36 articles that currently make up the “Python 3 Releases” series, the first of which was What’s New in Python 3.0.

Read article (46 minutes)

June 2022

☑ What’s New in Python 3.8 - New Features

In this series looking at features introduced by every version of Python 3, we move on to Python 3.8 and see what new features have been added in this release. These features include assignment expressions, positional-only parameters and two new modules in the standard library.
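The two language features named above can be sketched briefly, assuming Python 3.8 or later. Both functions are hypothetical examples rather than code from the article.

```python
# Requires Python 3.8 or later. Both functions are hypothetical.
def clamp(value, /, low, high):
    # Parameters before "/" are positional-only, so calling
    # clamp(value=3, low=0, high=10) raises TypeError.
    return max(low, min(value, high))


def first_long_word(words, min_len):
    for word in words:
        # ":=" assigns len(word) to n as part of the condition.
        if (n := len(word)) >= min_len:
            return word, n
    return None
```

Positional-only parameters let a function rename `value` later without breaking callers, since no caller can have passed it by keyword.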

This is the 17th of the 36 articles that currently make up the “Python 3 Releases” series, the first of which was What’s New in Python 3.0.

Read article (21 minutes)

☑ What’s New in Python 3.7 - Library Updates

In this series looking at features introduced by every version of Python 3, we complete our look at Python 3.7 by going through the changes in the standard library. These include three new modules, as well as changes across many other modules.

This is the 16th of the 36 articles that currently make up the “Python 3 Releases” series, the first of which was What’s New in Python 3.0.

Read article (40 minutes)

October 2021

☑ What’s New in Python 3.7 - New Features

In this series looking at features introduced by every version of Python 3, we begin to look at version 3.7 by seeing what major new features were added. These include several improvements to type annotations, some behaviour to cope with imperfect locale information, and a number of diagnostic improvements.

This is the 15th of the 36 articles that currently make up the “Python 3 Releases” series, the first of which was What’s New in Python 3.0.

Read article (18 minutes)

August 2021

☑ What’s New in Python 3.6 - Module Updates

In this series looking at features introduced by every version of Python 3, we finish our look at the updates in Python 3.6. This third and final article looks at the updates to library modules in this release. These include some asyncio improvements, new enumeration types and some new options for use with sockets and SSL.

This is the 14th of the 36 articles that currently make up the “Python 3 Releases” series, the first of which was What’s New in Python 3.0.

Read article (26 minutes)